NVIDIA: How Deep Learning Will Help Your Smartphone Track Your Gaze

OREANDA-NEWS. August 31, 2016. From diagnosing certain mental disorders to optimizing the placement of images in textbooks, eye tracking is useful across a variety of fields: psychology, medicine, advertising, marketing and more.

Scientists and researchers can learn a lot from understanding where people look and why. But making eye tracking easy and ubiquitous has been hard. Deep learning and NVIDIA GPUs are changing that.

Tapping Mobile’s Reach

Given eye tracking’s potential, it has nagged researchers that the technology isn’t more widely available. “It was quite shocking to me that we all don’t have eye-trackers,” says Aditya Khosla, a graduate student in the computer science and artificial intelligence laboratory of MIT’s electrical engineering and computer science department.

Khosla and a team of six other researchers from the University of Georgia and the Max Planck Institute of Informatics in Saarbruecken, Germany, set out to achieve a straightforward goal: create eye-tracking software that could run on any mobile phone with a camera.

The combination of powerful mobile technology and the ability to reach a huge number of users was irresistible to the team.

“If you need a big piece of equipment in a lab to do eye-tracking, then you can only reach a small audience,” says Kyle Krafka, a software engineer at Google who was finishing up his graduate computer science degree from the University of Georgia when the project began.

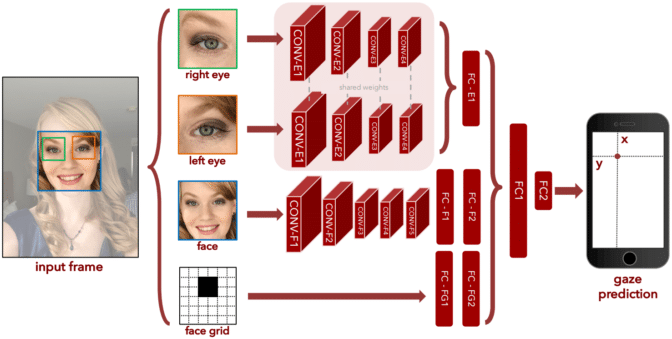

GPUs figured prominently in the team’s work. The researchers relied on the NVIDIA GeForce GTX TITAN X in combination with the Caffe deep learning framework for both training and inference of their neural network, which they dubbed iTracker.

Krafka said the TITAN X, which NVIDIA’s GPU Grant Program donated to the project, enabled him and Khosla to use parallel processing to run through hundreds of models, which wouldn’t have been possible on CPUs.

“It allowed us to experiment rapidly, try new ideas and find out what worked and what didn’t,” Krafka says.

Data, and Then Some

But to train iTracker, the team needed data. They took a novel approach to getting it: using Amazon Mechanical Turk, a crowdsourcing marketplace where human workers complete small tasks online. It allowed them to collect a much larger dataset than they could have through a traditional lab approach.

“Finding a way to make participation easy helped fuel the dataset, which fueled findings,” says Khosla. Using Amazon Mechanical Turk, the team accumulated an eye-tracking dataset covering nearly 1,500 participants, 30 times as many as any previous study.

That ground-breaking dataset was then used to train iTracker. Powered by the TITAN X, the training demonstrated that iTracker can run in real time on a mobile device. And it improved accuracy by a large margin over previous approaches.

The team is working on an app but, Khosla says, the group hasn’t decided whether to commercialize the technology. In the meantime, he says they’re planning to open source the work to the developer community and see what results.

For more details, check out the project website.
